Generating Shakespearean Text using an RNN

Generated Text

of what might fall, so to prevent

the standed is at the earth her face of the streets;

and she is, you and there is no more thans

the roman be read, the world it did true.

what, with heavens, my lord, I am to thee,

to caesar’s spiring with all the bloody,

and for the winds, to live thee

🤖 This text was generated entirely by an Artificial Intelligence 🤖

Deep Learning can be used for many interesting projects. In this small study, I show how I trained a Recurrent Neural Network (RNN) to generate new text in the style of William Shakespeare.

I used Keras because it allows for quick development, but the same could be achieved with NumPy alone. If you want to learn more after reading this article, I urge you to read this great article by Niklas Donges.

Recurrent Neural Network Model

Although creating an RNN sounds complex, the implementation is pretty easy with Keras. The model can be created with the following structure:

LSTM Layer → Dense (Fully Connected) Layer → Softmax Activation

The LSTM layer learns the patterns in the character sequences it reads from the text. The Dense layer has one output neuron for each unique character, and the Softmax activation turns those outputs into a probability distribution over the next character.
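As a quick illustration of what the Softmax activation does, here is a minimal NumPy sketch. The `softmax` helper and the example scores are hypothetical and not part of the model code: the function exponentiates the Dense layer's raw outputs and normalizes them so they sum to 1.

```python
import numpy as np

def softmax(logits):
    # subtract the max for numerical stability; this does not change the result
    logits = np.asarray(logits, dtype='float64')
    exp = np.exp(logits - np.max(logits))
    return exp / exp.sum()

# hypothetical raw Dense outputs for a 3-character vocabulary
probs = softmax([2.0, 1.0, 0.1])
print(probs.round(3))
```

The largest raw score gets the largest probability (roughly 0.66, 0.24 and 0.10 here), which is what lets us treat the model's output as "how likely is each character to come next".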

To optimize the model I used RMSprop, with Categorical Crossentropy as the loss function, i.e. the quantity the model seeks to minimize during training.
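For a single training example, categorical cross-entropy compares the one-hot target with the predicted distribution and reduces to the negative log of the probability assigned to the correct next character. A small NumPy sketch with made-up numbers (the `categorical_crossentropy` helper here is illustrative, not Keras's own implementation):

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # clip predictions away from 0 so log() stays finite
    y_pred = np.clip(np.asarray(y_pred, dtype='float64'), eps, 1.0)
    return -np.sum(np.asarray(y_true) * np.log(y_pred))

# the correct next character is index 0 in a 3-character vocabulary
y_true = [1, 0, 0]
confident = categorical_crossentropy(y_true, [0.7, 0.2, 0.1])  # equals -log(0.7)
unsure = categorical_crossentropy(y_true, [0.2, 0.4, 0.4])     # equals -log(0.2)
print(confident, unsure)
```

The more probability the model puts on the character that actually comes next, the lower the loss, which is exactly what RMSprop pushes the weights towards.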

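```python
from keras.models import Sequential
from keras.layers import Dense, Activation, LSTM
from keras.optimizers import RMSprop

# maxlen (length of each input sequence) and chars (the list of unique
# characters in the corpus) come from the data-preparation step (not shown).
model = Sequential()
model.add(LSTM(128, input_shape=(maxlen, len(chars))))  # 128 LSTM units
model.add(Dense(len(chars)))                            # one output per character
model.add(Activation('softmax'))                        # outputs -> probabilities

# `learning_rate` replaces the deprecated `lr` argument in newer Keras versions
optimizer = RMSprop(learning_rate=0.01)
model.compile(loss='categorical_crossentropy', optimizer=optimizer)
```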
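The model code relies on `maxlen` and `chars`, and training later uses `x` and `y`, but the article does not show how these are built. The sketch below assumes the approach of the official Keras text-generation example: cut the text into overlapping windows of `maxlen` characters (moving a hypothetical `step` of 3 characters at a time) and one-hot encode each window together with the character that follows it. The `vectorize` helper and the toy corpus are illustrative only.

```python
import numpy as np

def vectorize(text, maxlen=40, step=3):
    # vocabulary: every unique character, in a stable sorted order
    chars = sorted(set(text))
    char_indices = {c: i for i, c in enumerate(chars)}
    indices_char = {i: c for i, c in enumerate(chars)}

    # overlapping windows of maxlen chars and the char that follows each one
    sentences, next_chars = [], []
    for i in range(0, len(text) - maxlen, step):
        sentences.append(text[i: i + maxlen])
        next_chars.append(text[i + maxlen])

    # one-hot encode: x is (samples, maxlen, vocab), y is (samples, vocab)
    x = np.zeros((len(sentences), maxlen, len(chars)), dtype=bool)
    y = np.zeros((len(sentences), len(chars)), dtype=bool)
    for i, sentence in enumerate(sentences):
        for t, char in enumerate(sentence):
            x[i, t, char_indices[char]] = 1
        y[i, char_indices[next_chars[i]]] = 1
    return x, y, chars, char_indices, indices_char

# toy corpus for illustration; the real model was trained on Shakespeare
text = "to be, or not to be, that is the question"
x, y, chars, char_indices, indices_char = vectorize(text, maxlen=10, step=3)
print(x.shape, y.shape)
```

Each row of `y` marks the single character the model should predict for the matching window in `x`, which is the target format categorical cross-entropy expects.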

Helper Functions

Taking some inspiration from the official LSTM text generation example from the Keras Team, I created two helper functions to see the improvements the model made whilst training.

```python
import random
import sys

import numpy as np
from keras.callbacks import LambdaCallback

# text, maxlen, chars, char_indices, indices_char and model are
# defined in the data-preparation and model-building steps.

def sample(preds, temperature=1.0):
    # helper function to sample an index from a probability array
    preds = np.asarray(preds).astype('float64')
    preds = np.log(preds) / temperature   # rescale log-probabilities
    exp_preds = np.exp(preds)
    preds = exp_preds / np.sum(exp_preds)  # renormalize so they sum to 1
    probas = np.random.multinomial(1, preds, 1)
    return np.argmax(probas)


def on_epoch_end(epoch, logs):
    # Function invoked at end of each epoch. Prints generated text.
    print()
    print('----- Generating text after Epoch: %d' % epoch)

    start_index = random.randint(0, len(text) - maxlen - 1)
    for diversity in [0.2, 0.5, 1.0, 1.2]:
        print('----- diversity:', diversity)

        generated = ''
        sentence = text[start_index: start_index + maxlen]
        generated += sentence
        print('----- Generating with seed: "' + sentence + '"')
        sys.stdout.write(generated)

        for i in range(400):
            # one-hot encode the current window of maxlen characters
            x_pred = np.zeros((1, maxlen, len(chars)))
            for t, char in enumerate(sentence):
                x_pred[0, t, char_indices[char]] = 1.

            preds = model.predict(x_pred, verbose=0)[0]
            next_index = sample(preds, diversity)
            next_char = indices_char[next_index]

            generated += next_char
            # slide the window forward by one character
            sentence = sentence[1:] + next_char

            sys.stdout.write(next_char)
            sys.stdout.flush()
        print()


print_callback = LambdaCallback(on_epoch_end=on_epoch_end)
```
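The `diversity` values passed to `sample` act as a temperature: `sample` effectively raises each probability to the power 1/temperature and renormalizes before drawing. The sketch below pulls out just that reweighting step (the `reweight` name and the example distribution are hypothetical) to show that low temperatures sharpen the distribution towards the most likely character while high temperatures flatten it.

```python
import numpy as np

def reweight(preds, temperature):
    # same math as in sample(): exp(log(p) / T), then renormalize
    preds = np.asarray(preds, dtype='float64')
    scaled = np.exp(np.log(preds) / temperature)
    return scaled / scaled.sum()

preds = [0.5, 0.3, 0.2]
for temperature in [0.2, 0.5, 1.0, 1.2]:
    print(temperature, reweight(preds, temperature).round(3))
```

At 0.2 the most likely character gets over 90% of the probability, so the output is safe but repetitive; at 1.2 the less likely characters gain weight, which gives more surprising (and more error-prone) text.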

I also created two more callbacks. The first is a checkpoint that saves the model at the end of every epoch in which the loss decreases.

```python
from keras.callbacks import ModelCheckpoint

filepath = "weights.hdf5"
# save only when the training loss reaches a new minimum
checkpoint = ModelCheckpoint(filepath, monitor='loss',
                             verbose=1, save_best_only=True,
                             mode='min')
```
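With `save_best_only=True` and `mode='min'`, the checkpoint only writes the file when the monitored loss is lower than the best value seen so far. Here is a plain-Python sketch of that decision rule (the `epochs_saved` helper and the loss values are made up; this is not Keras's source code):

```python
def epochs_saved(losses):
    # returns the epochs at which a 'min'-mode, best-only checkpoint would save
    best = float('inf')
    saved = []
    for epoch, loss in enumerate(losses):
        if loss < best:  # new minimum: overwrite the checkpoint file
            best = loss
            saved.append(epoch)
    return saved

print(epochs_saved([2.1, 1.8, 1.9, 1.5, 1.5]))  # → [0, 1, 3]
```

Because the same `filepath` is reused, the file on disk always holds the lowest-loss model so far.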

The second callback reduces the learning rate each time the model's loss plateaus.

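```python
from keras.callbacks import ReduceLROnPlateau

# multiply the learning rate by 0.2 after 1 epoch without improvement,
# but never go below 0.001
reduce_lr = ReduceLROnPlateau(monitor='loss', factor=0.2,
                              patience=1, min_lr=0.001)

callbacks = [print_callback, checkpoint, reduce_lr]
```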
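With these settings and the initial RMSprop learning rate of 0.01, the first plateau lowers the rate to 0.01 × 0.2 = 0.002. The next would give 0.0004, which is below `min_lr`, so the rate is clamped at 0.001 and stays there. A simplified sketch of that rule (a hypothetical `lr_schedule` helper that ignores Keras's `min_delta` and `cooldown` options):

```python
def lr_schedule(losses, lr=0.01, factor=0.2, patience=1, min_lr=0.001):
    # learning rate in effect after each epoch, under a simplified plateau rule
    best = float('inf')
    wait = 0
    history = []
    for loss in losses:
        if loss < best:  # improvement: remember it and reset the counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:  # plateau: shrink the rate, but not below min_lr
                lr = max(lr * factor, min_lr)
                wait = 0
        history.append(lr)
    return history

print(lr_schedule([1.0, 1.1, 1.2, 1.3]))
```

With `patience=1` the rate drops after a single epoch without improvement, so in practice the floor of 0.001 is reached quickly.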

Training the Model and Generating New Text

Here comes the exciting part!!!

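All we have to do now is choose some parameter values, namely the batch size and the number of epochs:

```python
# x, y: one-hot encoded input windows and next characters from data preparation
model.fit(x, y, batch_size=128, epochs=100, callbacks=callbacks)
```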

LOOK AT HIM WRITING BY ITSELF! AAAAAAAAAAA IT’S ALIVE! 🤖

All that is really left is a function that generates text the same way on_epoch_end does. It picks a random starting index, takes the next 40 characters (maxlen) from the text as a seed, and then uses them to predict new characters one at a time. Its parameters are the length of the text we want to generate and the diversity of the generated text.

```python
def generate_text(length, diversity):
    # Get random starting text
    start_index = random.randint(0, len(text) - maxlen - 1)
    generated = ''
    sentence = text[start_index: start_index + maxlen]
    generated += sentence
    for i in range(length):
        x_pred = np.zeros((1, maxlen, len(chars)))
        for t, char in enumerate(sentence):
            x_pred[0, t, char_indices[char]] = 1.

        preds = model.predict(x_pred, verbose=0)[0]
        next_index = sample(preds, diversity)
        next_char = indices_char[next_index]

        generated += next_char
        sentence = sentence[1:] + next_char
    return generated
```
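The core of `generate_text` is an autoregressive loop: predict a distribution over the next character, pick one, append it, and slide the window forward. The sketch below isolates that loop from Keras by taking a hypothetical `predict_fn` (any function mapping a one-hot window to probabilities) and uses greedy `argmax` selection instead of `sample`, so the output is deterministic. The toy predictor is purely illustrative.

```python
import numpy as np

def generate(seed, length, chars, predict_fn):
    # the window keeps len(seed) characters and slides forward one char per step
    char_indices = {c: i for i, c in enumerate(chars)}
    maxlen = len(seed)
    sentence, generated = seed, seed
    for _ in range(length):
        x_pred = np.zeros((1, maxlen, len(chars)))
        for t, char in enumerate(sentence):
            x_pred[0, t, char_indices[char]] = 1.0
        preds = predict_fn(x_pred)[0]
        next_char = chars[int(np.argmax(preds))]  # greedy pick, not sample()
        generated += next_char
        sentence = sentence[1:] + next_char
    return generated

# hypothetical predictor: always predicts the vocabulary entry after the window's last char
def toy_predict(x_pred):
    vocab = x_pred.shape[2]
    last = int(np.argmax(x_pred[0, -1]))
    probs = np.zeros((1, vocab))
    probs[0, (last + 1) % vocab] = 1.0
    return probs

print(generate("ab", 4, ['a', 'b', 'c'], toy_predict))  # → abcabc
```

Swapping `toy_predict` for `lambda x: model.predict(x, verbose=0)` and the greedy pick for `sample(preds, diversity)` gives back the behaviour of `generate_text`.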

We can now create a 🤖 text simply by calling the generate_text function:

```python
print(generate_text(250, 0.2))
```

This gave us the text at the top of the page!

Conclusion

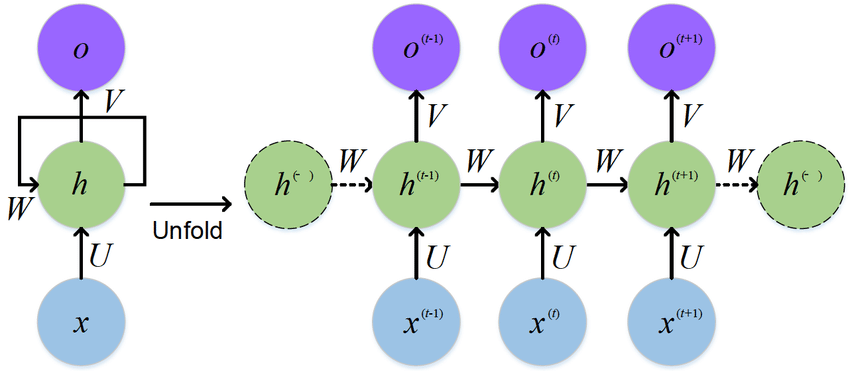

Recurrent Neural Networks are well suited to sequential data because they keep an internal memory (a hidden state) of previous inputs. They have achieved strong results on many sequential problems and are widely used in industry. As shown here, an RNN can also be used to generate text in the style of a specific author.
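To make the idea of internal memory concrete: a simple (Elman-style) RNN cell updates a hidden state h_t = tanh(W_x x_t + W_h h_{t-1} + b), so each new state depends on everything seen before. Here is a minimal NumPy sketch with random, untrained weights and arbitrary sizes; an LSTM adds gates on top of this basic idea.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    # inputs: (timesteps, input_dim); returns the hidden state at every step
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in inputs:
        h = np.tanh(W_x @ x_t + W_h @ h + b)  # new state mixes input and old state
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_dim, hidden_dim = 4, 3
W_x = rng.normal(size=(hidden_dim, input_dim))
W_h = rng.normal(size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

seq_a = np.eye(input_dim)[[0, 1, 2]]  # one-hot inputs 0, 1, 2
seq_b = np.eye(input_dim)[[3, 1, 2]]  # differs only at the first step
last_a = rnn_forward(seq_a, W_x, W_h, b)[-1]
last_b = rnn_forward(seq_b, W_x, W_h, b)[-1]
print(last_a, last_b)
```

The two final states differ even though the last two inputs are identical, because the first input is still carried in the hidden state.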

I believe more work could make the model even more sophisticated, for example by tweaking the network structure (adding more LSTM and Dense layers).
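One way to try the deeper structure suggested above is to stack LSTM layers. When stacking, every LSTM except the last needs `return_sequences=True` so that it passes a full sequence, rather than only its final output, to the next LSTM. This is an untested sketch: the two 128-unit layers are my assumption, and `maxlen` and `n_chars` are placeholder values standing in for `maxlen` and `len(chars)` from data preparation.

```python
import numpy as np
from keras.models import Sequential
from keras.layers import Input, LSTM, Dense, Activation
from keras.optimizers import RMSprop

maxlen, n_chars = 40, 60  # placeholder sizes; use maxlen and len(chars) from your data

model = Sequential()
model.add(Input(shape=(maxlen, n_chars)))
# return_sequences=True: emit an output at every timestep for the next LSTM
model.add(LSTM(128, return_sequences=True))
model.add(LSTM(128))  # the last LSTM returns only its final output
model.add(Dense(n_chars))
model.add(Activation('softmax'))
model.compile(loss='categorical_crossentropy',
              optimizer=RMSprop(learning_rate=0.01))

# sanity check on dummy input: one probability distribution per window
probs = model.predict(np.zeros((2, maxlen, n_chars)), verbose=0)
print(probs.shape)
```

A deeper network has more capacity but also trains more slowly, so it may need more epochs (or a lower learning rate) before it beats the single-layer model.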

If you liked this page, I recommend this chapter to deepen your knowledge even further. Perhaps I got you excited about the future of artificial intelligence once again!
